Writing Better Tests

To write better tests, we first need to remember why we’re writing tests.

The obvious answer, and the traditional narrative, is to prevent regressions. We write tests to verify the functionality of our code. Then, if we change the code and introduce a bug, we know about it.

It’s hard to argue with this: tests definitely do find bugs. But in my experience, tests need to be updated more often than they find bugs. If this were the only reason we write tests, it probably wouldn’t be worth it.

So why do we write tests?

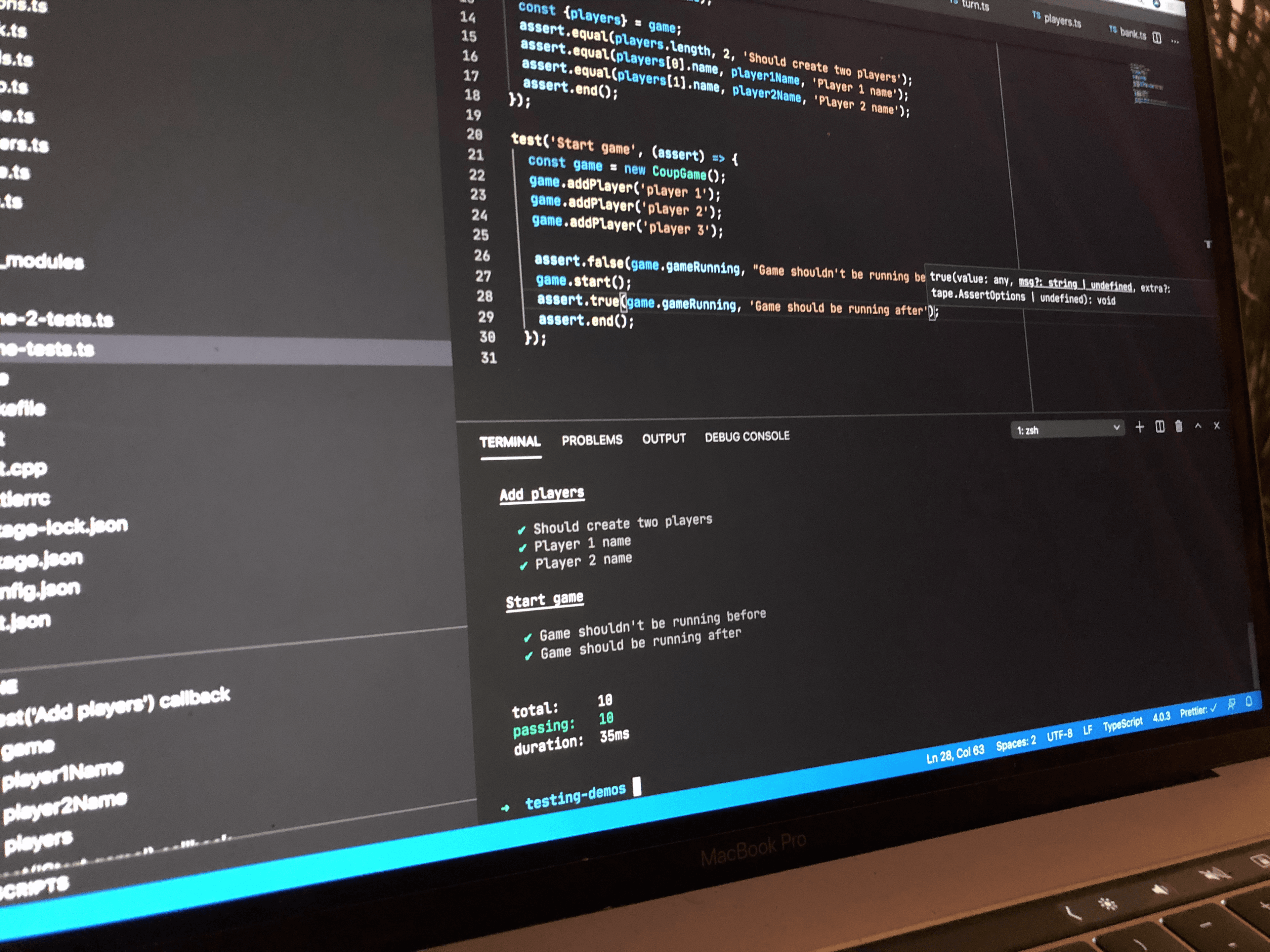

The initial reason we write tests is that they’re a convenient way to develop our code. We can add functionality, writing tests as we go to cover our tracks. Tests provide scaffolding from which we can poke and prod our system in ways that would be difficult or impossible with manual testing. Unlike in construction, we can leave our scaffolding up!

But the true value only starts to shine later down the line.

I like to think of good tests as a redundant power supply. With a redundant power supply, we can make changes to our system without any downtime. In the same way, we can make changes to code that is covered by tests with confidence. We can add features. We can refactor. We can bring other developers onto the project.

But what makes a good test? How can we write tests that are robust, focused, and fast?

Here’s my advice to write better tests.

Mindful testing

Mindfulness is about being fully present and aware in the moment. When you practice meditation, you practice awareness of your breath, your senses and how thoughts pop into your head.

What does this have to do with testing?

I think you can apply a similar approach to testing. Testing shouldn’t be a chore or an afterthought. As you write tests, you should be aware of what those tests are telling you about your code. This is the ‘Driven’ part of Test-Driven Development (TDD). Testing should be the principal tool that you use to guide the design of your code.

As you’re writing tests, become aware of those nagging doubts in the back of your head: Why is this so hard to test? Why does this thing have so many dependencies? It’s too complex to test properly!

These doubts are the tests trying to tell you that there are problems with the underlying code. Listen to those doubts and refactor.
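Here is a hypothetical illustration. The doubt "why is this so hard to test?" often points to a hidden dependency, such as the system clock. One common refactoring is to make that dependency a parameter. In the sketch below, `greeting` and its `now` parameter are invented for the example. They are not taken from any particular codebase.

```python
import datetime
import unittest
from typing import Callable


# Hard to test: the current time is fetched inside the function, so a test
# can only check whichever branch applies at the moment it happens to run.
def greeting_hardwired(name: str) -> str:
    hour = datetime.datetime.now().hour
    return f"Good {'morning' if hour < 12 else 'afternoon'}, {name}"


# Easier to test: the clock is injected (a hypothetical design choice).
# Production callers get the real clock by default; tests pass a fixed one.
def greeting(name: str,
             now: Callable[[], datetime.datetime] = datetime.datetime.now) -> str:
    hour = now().hour
    return f"Good {'morning' if hour < 12 else 'afternoon'}, {name}"


class GreetingTest(unittest.TestCase):
    def test_morning(self):
        fixed_clock = lambda: datetime.datetime(2020, 10, 15, 9, 0)
        self.assertEqual(greeting("Ada", now=fixed_clock), "Good morning, Ada")

    def test_afternoon(self):
        fixed_clock = lambda: datetime.datetime(2020, 10, 15, 15, 0)
        self.assertEqual(greeting("Ada", now=fixed_clock), "Good afternoon, Ada")
```

The refactored function has the same behaviour for existing callers. The difference is that the design no longer fights the tests.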

Test code through its public interface

All code, whether it is a function, class, module, library, or framework, has a public interface. This is the contract for how the code is supposed to be used, and it often extends beyond function signatures. For example, a component might also depend on the layout of a file on disk, serialised data from a stream, or events from an event dispatcher.

We should focus our testing on the public interface, rather than providing back doors.

There are three main benefits to testing code through its public interface:

Firstly, it helps to make sure that the public interface is fit for purpose. A great interface is not only easy to use, but it’s hard to misuse. If a component is hard to use in tests, it’s probably hard to use as part of a system.

Secondly, it prevents spurious test failures when implementation details change. A component should be within its rights to change implementation details without affecting anything that depends on it, including tests.

Finally, it encourages an appropriate level of refactoring. If there is a lot of functionality hidden in an implementation detail of a component, it might be a sign that there’s a hidden component that could be tested in its own right.
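The sketch below contrasts the two approaches using a hypothetical `ShoppingCart`. Its public interface is assumed to be `add()` and `total()`. The first test uses a back door into a private attribute. The second checks the same behaviour through the public interface.

```python
import unittest


class ShoppingCart:
    """Hypothetical component; its public interface is add() and total()."""

    def __init__(self):
        self._prices = []  # implementation detail, free to change

    def add(self, price_pence: int, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self._prices.extend([price_pence] * quantity)

    def total(self) -> int:
        return sum(self._prices)


class ShoppingCartTest(unittest.TestCase):
    # Brittle: reaches into a private attribute, so it would break if the
    # cart switched to storing (price, quantity) pairs, even though the
    # observable behaviour stayed the same.
    def test_add_via_back_door(self):
        cart = ShoppingCart()
        cart.add(250, quantity=2)
        self.assertEqual(cart._prices, [250, 250])

    # Robust: exercises the same behaviour through the public interface.
    def test_add_increases_total(self):
        cart = ShoppingCart()
        cart.add(250, quantity=2)
        self.assertEqual(cart.total(), 500)
```

Both tests pass today. Only the second would keep passing after a refactor that leaves `add()` and `total()` behaving the same.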

Treat tests as production code

As code evolves, so do tests. Tests will not remain static throughout their lifetime and it’s likely that they will have to change as the code underneath them changes.

For this reason, it’s best to treat the tests as production code. Don't copy and paste large swathes of code just because they're tests. Don't leave sections of code commented out just because they're tests. Don't introduce nasty hacks just because they're tests. Use your favourite buzzwords and acronyms: SOLID, DRY, YAGNI, SRP, KISS. That way, when you inevitably have to make changes to the tests, the impact will be minimal.

Of course, there are exceptions to this rule. Hardcoded constants are widely used as test inputs, for example.
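As an illustration, here is one small way to apply this. Instead of copy-pasting object construction into every test, a test helper gives you one place to change if the model changes. The hardcoded constants stay as test inputs. `User`, `can_delete_posts`, and `make_user` are all hypothetical names used only for this sketch.

```python
import unittest
from dataclasses import dataclass


@dataclass
class User:
    name: str
    email: str
    is_admin: bool = False


def can_delete_posts(user: User) -> bool:
    """Hypothetical rule under test."""
    return user.is_admin


# Test helper written with the same care as production code. If User gains
# a new required field, only this function needs to change.
def make_user(**overrides) -> User:
    # Hardcoded constants are perfectly acceptable as test inputs.
    fields = {"name": "Test User", "email": "test@example.com"}
    fields.update(overrides)
    return User(**fields)


class PermissionsTest(unittest.TestCase):
    def test_admin_can_delete_posts(self):
        self.assertTrue(can_delete_posts(make_user(is_admin=True)))

    def test_regular_user_cannot_delete_posts(self):
        self.assertFalse(can_delete_posts(make_user()))
```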

Test one thing at a time

One of our goals for great tests is that a test failure gives a clear indication of where the error lies. To do this, we need to avoid false positives (failing tests when everything is okay) and false negatives (passing tests when there is a problem).

We can go a long way towards this by strictly testing one thing at a time. This means that everything that is required to test a component is set up and torn down for every test on that component.

How does this help us? It helps us avoid false positives. When there are complex tests that consist of lots of steps, intertwined with assertions, it can be very difficult to determine what’s gone wrong when the test fails. When there is little to no shared state between tests, this is less of a problem.

To help us, we can follow the pattern ‘Arrange, Act, Assert’. For every test, we first arrange our preconditions. Then, we perform some kind of action. Finally, we assert that the action had the effect we were expecting (this isn’t necessarily a single assertion). It’s important that, at this point, we don’t act again.
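In Python's built-in `unittest` framework, a test following Arrange, Act, Assert might look like the minimal sketch below. `apply_discount` is a hypothetical function invented for the example. Note that the Assert step contains two assertions but no further action.

```python
import unittest


def apply_discount(price_pence: int, percent: int) -> int:
    """Hypothetical function under test: rounds down to whole pence."""
    return price_pence * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        # Arrange: set up the preconditions.
        price = 1999
        # Act: perform exactly one action.
        discounted = apply_discount(price, percent=10)
        # Assert: check the effects of that action; no further acting.
        self.assertEqual(discounted, 1799)
        self.assertLess(discounted, price)
```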

Sometimes it’s tempting to perform another test whilst the component is set up and ready for testing. When we face this situation, it’s better to refactor the setup so that it can be run from multiple tests. That way, the tests are independent and their results are more meaningful.
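With `unittest`, one way to do this is to move the shared preparation into `setUp`, which the framework runs before every test method. In this sketch, the `Stack` class is hypothetical. A single test that pops, asserts, pops again, and asserts again is replaced by separate tests. Each one acts once on a freshly built stack.

```python
import unittest


class Stack:
    """Hypothetical component under test."""

    def __init__(self):
        self._items = []

    def push(self, item) -> None:
        self._items.append(item)

    def pop(self):
        return self._items.pop()

    def __len__(self) -> int:
        return len(self._items)


class StackTest(unittest.TestCase):
    # unittest runs setUp before *each* test method, so every test starts
    # from an identical, freshly built stack and no state leaks between tests.
    def setUp(self):
        self.stack = Stack()
        self.stack.push("a")
        self.stack.push("b")

    # Each behaviour gets its own test that acts exactly once.
    def test_pop_returns_last_pushed_item(self):
        self.assertEqual(self.stack.pop(), "b")

    def test_pop_removes_one_item(self):
        self.stack.pop()
        self.assertEqual(len(self.stack), 1)
```

If `test_pop_returns_last_pushed_item` fails, that failure cannot cause the other test to fail as well.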

Happy path first

It’s tempting to start testing by throwing garbage at your code, before standing back to admire how robustly it handles every combination of strange and unique input values. The problem is, once you go down the path of testing every possible input your code can receive, in every possible order, the combinatorial explosion becomes overwhelming. Most of these edge cases will never occur in practice, so the tests aren’t very valuable.

For that reason, start by focusing on the core use cases for the component. If every component has tests for its main use cases, you probably have a functioning system. The same can’t be said if every component could handle every possible input value.

Don’t write unit tests where a runtime assertion is more appropriate. If the code has clear preconditions, they don’t need to be checked with unit tests, because the behaviour when they are violated can be left undefined.

The only place where your code should be robust to error conditions is at its external boundaries: reading from files, reading from the network, dealing with user input etc. Internally, within a system, it’s reasonable to assume that calling code will be a good citizen.
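The sketch below shows this split in practice. The internal helper states its precondition with a runtime `assert`, and it is tested only on its happy path. The boundary function that handles raw user input validates that input and has its error handling tested. `average` and `parse_scores` are hypothetical names for illustration.

```python
import unittest
from typing import List


def average(values: List[float]) -> float:
    """Internal helper: callers are trusted to pass a non-empty list."""
    # A runtime assertion documents the precondition, so there is no
    # need for unit tests that feed this function an empty list.
    assert values, "average() requires at least one value"
    return sum(values) / len(values)


def parse_scores(user_input: str) -> List[float]:
    """External boundary: user input is validated, not trusted."""
    parts = [p.strip() for p in user_input.split(",") if p.strip()]
    if not parts:
        raise ValueError("please enter at least one score")
    try:
        return [float(p) for p in parts]
    except ValueError:
        raise ValueError(f"not a list of numbers: {user_input!r}") from None


class ScoresTest(unittest.TestCase):
    # Happy path first: the core use case.
    def test_average_of_parsed_scores(self):
        self.assertEqual(average(parse_scores("1, 2, 3")), 2.0)

    # Error handling is tested at the boundary, where bad input is expected.
    def test_rejects_non_numeric_input(self):
        with self.assertRaises(ValueError):
            parse_scores("1, two, 3")
```

Python removes `assert` statements when run with `-O`, so the assertion in `average` acts as a development-time check on callers. It is not input validation.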

Add tests for bugs found in the wild

When users report errors, the first thing you should do is write a test that reproduces the bug. If you’ve already found a fix for the problem, commit the fix, back it out, write a test that exposes the bug, then reintroduce the fix. That way, you see the test fail without the fix and pass with it.

The benefit of this is not only to prevent the bug recurring, although that is clearly valuable.

The bigger benefit is understanding the gaps in your current testing strategy. Was it a simple oversight? Are there other similar tests missing? Was it possible to have a test for this? If not, why not?

The feedback cycle from bugs that we find in the wild is the final part of the loop. We can use this feedback to reflect on the efficacy of our tests and focus on what’s important.
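As a hypothetical example, suppose users report that a word counter claims an empty document contains one word. The buggy version is assumed to be `len(text.split(" "))`. The regression tests below are written from the report. They fail against that version and pass once the fix (splitting on any whitespace) is reapplied.

```python
import unittest


def count_words(text: str) -> int:
    # Fixed version. The hypothetical buggy version was len(text.split(" ")),
    # which returns 1 for "" and over-counts runs of several spaces.
    return len(text.split())


class CountWordsRegressionTest(unittest.TestCase):
    # Reproduces the reported bug directly.
    def test_empty_text_has_no_words(self):
        self.assertEqual(count_words(""), 0)

    # Asking "are there other similar tests missing?" uncovers a related case.
    def test_repeated_spaces_are_not_counted_as_words(self):
        self.assertEqual(count_words("hello   world"), 2)
```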

Cut waste

Redundant tests are tests that don’t contribute any value. A classic example is a setter and a getter. If there is no extra logic behind setting and getting a value, there is no need to write a test purely for the sake of code coverage.

We should be vigilant about tests that make the same assumptions as the code underneath. Tests like this can make us feel better, but they don’t give us the benefits that we’re looking for.

There is no reason to simply repeat the implementation in tests: focus on tests that add value. Avoid the myth that more is better.
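The hypothetical `Temperature` class below illustrates the difference. Its plain `celsius` attribute has no logic and gets no test. The first test recomputes the expected value with the same formula as the implementation, so it can never disagree with it. The second test checks against independently known reference points.

```python
import unittest


class Temperature:
    """Hypothetical component: a plain attribute plus one real calculation."""

    def __init__(self, celsius: float):
        self.celsius = celsius  # plain attribute: no logic worth testing

    def fahrenheit(self) -> float:
        return self.celsius * 9 / 5 + 32


class TemperatureTest(unittest.TestCase):
    # Waste: repeats the implementation's formula, so it inherits any
    # mistake in that formula and adds no information.
    def test_fahrenheit_mirrors_implementation(self):
        t = Temperature(37.0)
        self.assertEqual(t.fahrenheit(), 37.0 * 9 / 5 + 32)

    # Value: compares against reference points known independently.
    def test_fahrenheit_known_values(self):
        self.assertEqual(Temperature(0).fahrenheit(), 32)
        self.assertEqual(Temperature(100).fahrenheit(), 212)
        self.assertEqual(Temperature(-40).fahrenheit(), -40)
```

If the formula were mistyped as `* 5 / 9`, the first test would still pass, but the second would catch the error.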

Test in the right place

We write tests at different levels of granularity. Unit tests test an individual component or unit, usually mocking its dependencies. Acceptance (or integration) tests check that code fulfils its requirements and usually involve the interaction between multiple components. End-to-end tests exercise the entire system.
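A minimal sketch of the first two levels follows. It uses Python's `unittest.mock.Mock` for the unit test and a real, in-memory dependency for the integration test. `OrderService` and `InMemoryRepository` are hypothetical components invented for the example.

```python
import unittest
from unittest.mock import Mock


class InMemoryRepository:
    """A real (if simple) dependency that stores order totals in a dict."""

    def __init__(self):
        self._orders = {}

    def save(self, order_id: str, total: int) -> None:
        self._orders[order_id] = total

    def get(self, order_id: str) -> int:
        return self._orders[order_id]


class OrderService:
    """Hypothetical component that depends on a repository."""

    def __init__(self, repository):
        self._repository = repository

    def place_order(self, order_id: str, prices: list) -> int:
        total = sum(prices)
        self._repository.save(order_id, total)
        return total


class OrderServiceUnitTest(unittest.TestCase):
    # Unit test: the repository is a mock, so only OrderService's own
    # logic is exercised.
    def test_saves_total_to_repository(self):
        repository = Mock()
        OrderService(repository).place_order("A1", [100, 250])
        repository.save.assert_called_once_with("A1", 350)


class OrderServiceIntegrationTest(unittest.TestCase):
    # Integration test: real components work together. This is closer to
    # the user's goal ("my order is stored"), but a failure could come
    # from either component.
    def test_placed_order_can_be_retrieved(self):
        repository = InMemoryRepository()
        OrderService(repository).place_order("A1", [100, 250])
        self.assertEqual(repository.get("A1"), 350)
```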

As we move up the testing hierarchy, tests become more relevant to a user’s goals, but harder to write, harder to debug and slower to run.

The optimal testing mix will vary by project and task. Sometimes a specific component is critical, so it should be thoroughly tested in isolation with unit tests. Other times, the interaction between several components is more important, so acceptance tests are more appropriate. Occasionally, you can only get confidence by testing the whole system.

From time to time, it’s important to reflect on the testing mix for a project. Does it verify the user’s goals? Are the tests easy to write and debug? Do the tests run quickly enough or often enough?

You can use the answers to these questions to guide the balance between types of tests. This way, you can extract the most value from every test that is written.

This is not an exhaustive list of how to write perfect tests. There are lots of other challenges not mentioned here (mocking, use of randomness, user interface testing, dealing with time, code coverage, performance, hardware, multithreading). I hope that keeping some of this advice in mind will guide you towards writing better tests.

Also posted on Medium

Published: 2020-10-15